University of Leicester

School of Computing and Mathematical Sciences

E mail: sy237(at)le.ac.uk


Research Interests

My research focuses on multidisciplinary approaches to solving real-world problems, drawing inspiration and methodologies from computer vision, artificial intelligence, biology, neuroscience, and psychology. Specific applications of our research are in robotics, intelligent vehicles, security, and healthcare. I am extremely interested in how animals' visual systems enable them to interact with the outside world, and how to simulate or realize such complex systems in software and hardware for various applications.

The following keywords illustrate my specific research interests and previous research experience:


Projects


My research has been supported by EU FP6/FP7, EU Horizon 2020, the UK Government, the Alexander von Humboldt Foundation (AvH), the Home Office, NTT, UoL, and other public funding bodies. Their generous support is very much appreciated. Some of the projects are listed below.


ULTRACEPT: Ultra-layered perception with brain-inspired information processing for vehicle collision avoidance (EU Horizon 2020, project coordinator, 2018-2024). The primary objective of this project is to develop a trustworthy vehicle collision detection system inspired by the animal visual brain, through trans-institutional collaboration. By processing multimodal sensor inputs, such as vision and thermal maps, with innovative brain-inspired visual algorithms, the project will bring together multidisciplinary, cross-continental cooperation between academia and industry in the field of bio-inspired visual neural systems for collision detection, in normal as well as complex and/or extreme environments. Project website, summary clip, and video.


ENRICHME: Enabling Robot and assisted living environment for Independent Care and Health Monitoring of the Elderly (EU Horizon 2020, Co-PI, 2015-2018). ENRICHME tackles the progressive decline of cognitive capacity in the ageing population by proposing an integrated platform for Ambient Assisted Living (AAL) with a mobile service robot for long-term human monitoring and interaction, which helps elderly people remain independent and active for longer. Project on Facebook (ENRICHME); short clips about the project: video1, video2, video3.


STEP2DYNA: Spatial-temporal information processing for collision detection in dynamic environments (EU Horizon 2020, project coordinator, 2016-2021). Autonomous unmanned aerial vehicles (UAVs), which have demonstrated great potential in serving human society, for example in delivering goods to households and in precision farming, are held back by their lack of collision detection capability in complex dynamic environments mixed with people, pets, buildings, and wires. This project brings together 11 world-class research teams and European SMEs, each with specialised expertise, and proposes an innovative bio-inspired sensor system for UAV collision detection. Project website, video, and short clip.


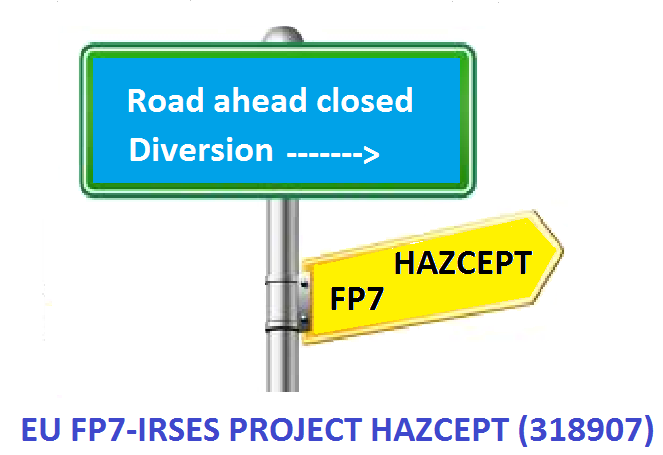

HAZCEPT: Towards zero road accidents - nature inspired hazard perception (EU FP7, coordinator). The number of road traffic accident fatalities worldwide has recently reached 1.3 million each year, with between 20 and 50 million injuries caused by road accidents. In theory, all accidents can be avoided: studies have shown that more than 90% of road accidents are caused by or related to human error. Developing an efficient system that can robustly detect hazardous situations is key to reducing road accidents. The HAZCEPT consortium will focus on automatic hazard scene recognition for safe driving, addressing hazard recognition from three aspects of the safe driving loop: the low visual level, the cognitive level, and driver factors.


LIVCODE: Life like information processing for robust collision detection (EU FP7, coordinator). Animals are especially good at collision avoidance, even in dense swarms. In the future, every kind of man-made moving machine, such as ground vehicles, robots, UAVs, aeroplanes, boats, and even moving toys, should have the same ability to avoid colliding with other objects, provided a robust collision detection sensor is available. The six partners of this EU FP7 project, from the UK, Germany, Japan, and China, will further investigate insect visual pathways and take inspiration from animal vision systems to explore robust embedded solutions for vision-based collision detection in future intelligent machines.


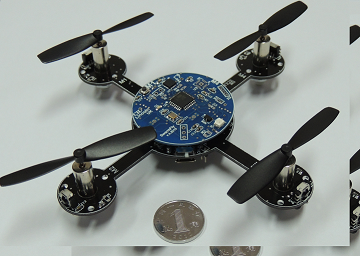

Mini UAVs the size of a hand are specially designed for studying swarm intelligence. They may also be used in other application areas, for example as a platform for research on flying-robot coordination, collision avoidance, surveillance, and human-robot interaction, and even to take part in rescue missions.


DiPP: detecting hostile intent by measuring psychological and physiological reactions to stimuli (UK, Home Office, PI). This is a fascinating project that tackles a huge challenge with innovative ideas. The feasibility study has proved the concept and methodologies, and we are taking steps to push the idea further. Investors and/or potential collaborators are welcome to contact me about involvement in further development for industrial applications.


Pedestrian: pedestrian collision detection with bio-inspired neural networks (Fellowship). In this project, only pedestrians who are on a collision course with a moving vehicle, or are walking into one, are monitored and evaluated with hierarchical neural networks. Several types of bio-inspired neural networks are combined to achieve better performance in pedestrian collision detection.


Evolving bio-plausible neural systems to control aerial agents (UoL). This project looks for ways to evolve vision-based neural controllers for autonomous agents. The agents are initially evolved in 3D virtual environments, and the best-performing agent is then tested through its physical counterpart flying or running in the real world. The platform developed and used in this study can be accessed via Mark Smith's page altURI if you are interested.


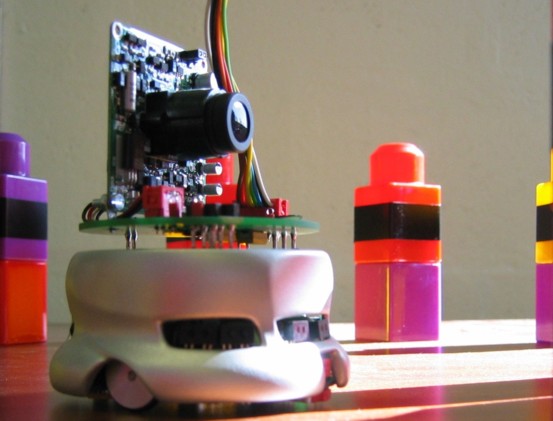

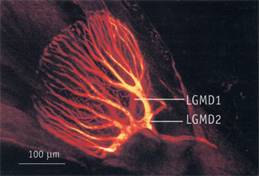

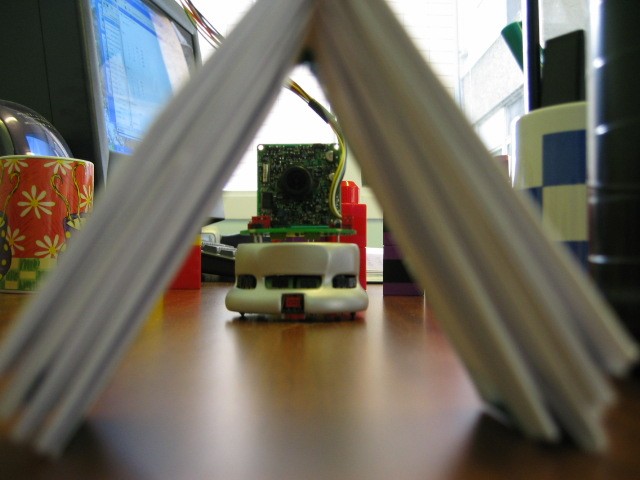

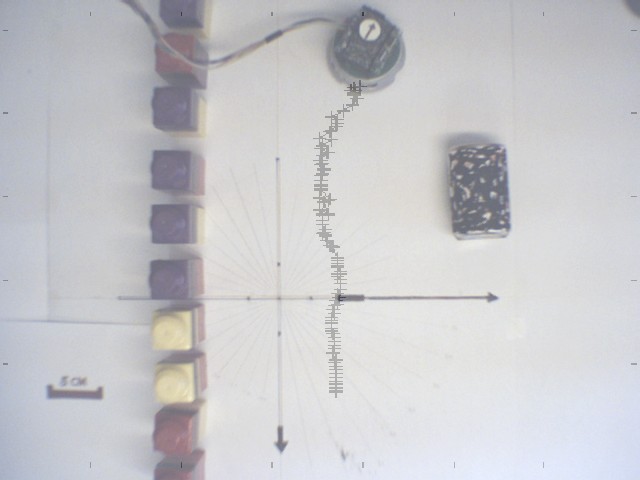

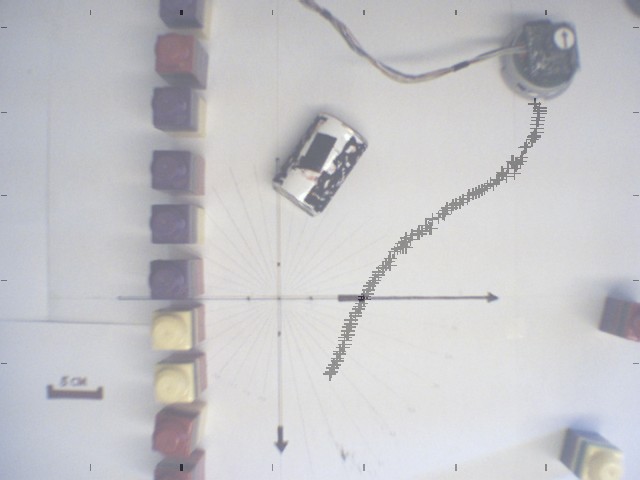

Vehicle or robot collision detection inspired by the locust visual pathway (LOCUST, EU). Life-like image processing inspired by the locust visual pathway is used for vehicle collision detection.


With a pair of LGMDs (lobula giant movement detectors), a robot can navigate easily in a dynamic environment; click [video] on YouTube to see how it changes course to avoid a rolling can. The short movie presented at AISB'07 is on YouTube [movie] (90 s); click to see how a robotic 'locust' with panoramic vision responds sensibly to approaching objects.
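For readers curious how an LGMD-style detector can turn image motion into a collision signal, here is a minimal Python sketch of the layered structure commonly described for LGMD models: a photoreceptor layer of frame differences, lateral inhibition delayed by one frame and spread to neighbouring cells, a summation layer, and a thresholded output. The 4-neighbour kernel, the inhibition weight `w_i`, the threshold, and the synthetic looming-square frames are all illustrative assumptions; this is not the model, parameters, or code used in the LOCUST project.

```python
from typing import List, Tuple

Frame = List[List[float]]  # grey-level image as rows of floats in [0, 1]


def photoreceptor(prev: Frame, curr: Frame) -> Frame:
    """P layer: absolute luminance change of each pixel between two frames."""
    return [[abs(c - p) for p, c in zip(prow, crow)] for prow, crow in zip(prev, curr)]


def delayed_inhibition(p_prev: Frame) -> Frame:
    """I layer: the previous P-layer output spread to the 4 nearest neighbours."""
    h, w = len(p_prev), len(p_prev[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w:
                    total += p_prev[ny][nx]
            out[y][x] = 0.25 * total
    return out


def lgmd_response(frames: List[Frame], w_i: float = 0.6,
                  threshold: float = 100.0) -> Tuple[List[float], List[bool]]:
    """S layer + LGMD cell: per frame step, sum of rectified (excitation - w_i * inhibition)."""
    height, width = len(frames[0]), len(frames[0][0])
    p_prev: Frame = [[0.0] * width for _ in range(height)]
    potentials: List[float] = []
    for prev, curr in zip(frames, frames[1:]):
        p_curr = photoreceptor(prev, curr)   # excitation uses the current change
        inh = delayed_inhibition(p_prev)     # inhibition lags by one frame
        k = sum(max(0.0, e - w_i * i)
                for erow, irow in zip(p_curr, inh) for e, i in zip(erow, irow))
        potentials.append(k)
        p_prev = p_curr
    return potentials, [k > threshold for k in potentials]


def square_frame(size: int, half: int) -> Frame:
    """Hypothetical test frame: dark square of half-width `half` on a bright background."""
    c = size // 2
    return [[0.0 if abs(x - c) <= half and abs(y - c) <= half else 1.0
             for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    looming = [square_frame(21, h) for h in (1, 2, 4, 7)]  # accelerating expansion
    print(lgmd_response(looming))
```

With a dark square that expands at an accelerating rate, the summed potential rises at each step and only the last, fastest expansion crosses the threshold, while a static scene produces no response at all. The one-frame delay on inhibition is what lets rapidly growing edges outrun their own inhibition; steering as in the robot video would additionally need a pair of such detectors with separate fields of view.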


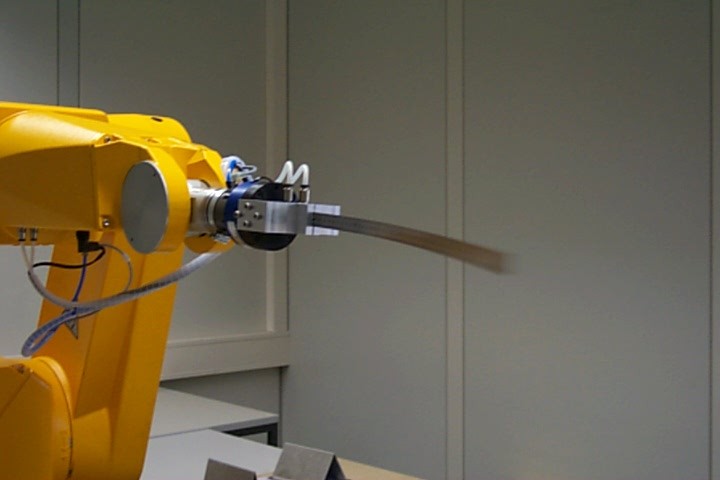

Robotic Manipulation Skills: intelligent robotic systems can be constructed hierarchically, starting from primary skills. Each of these skills deals with similar tasks in similar situations. Take manipulating soft/flexible objects as an example: to insert an elastic object into a hole efficiently, the robotic arm can either damp the vibration very quickly, as shown in the video clips [damp1] with a PID controller and [damp2] with a fuzzy controller, or perform the insertion directly if the state of the deformable object is known with the help of different sensors and models, as shown in the two video clips [FastInsertion1] and [FastInsertion2].
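As a rough illustration of the vibration-damping strategy, the Python sketch below treats the held elastic object as a single lightly damped mass-spring system and compares its free vibration with its response under a textbook PID controller. The plant constants, the gains, and the assumption that the arm can apply a force directly to the vibrating tip are hypothetical simplifications, not the setup used in the [damp1] experiment; the fuzzy-controller variant is not shown.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PID:
    """Textbook PID controller; gains used below are hypothetical, not tuned for a real arm."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0

    def update(self, error: float, error_rate: float, dt: float) -> float:
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral + self.kd * error_rate


def simulate(controller: Optional[PID], x0: float = 0.01, m: float = 1.0,
             k: float = 100.0, c: float = 0.2, dt: float = 1e-3,
             t_end: float = 3.0) -> List[float]:
    """Displacement trace of an assumed lightly damped mass-spring model of the object's tip."""
    x, v = x0, 0.0
    trace: List[float] = []
    for _ in range(int(round(t_end / dt))):
        u = 0.0
        if controller is not None:
            # Setpoint is 0, so error = -x; its rate is taken as -v
            # (derivative on measurement, which avoids a kick on setpoint changes).
            u = controller.update(-x, -v, dt)
        a = (-k * x - c * v + u) / m
        v += a * dt  # semi-implicit Euler: update velocity first...
        x += v * dt  # ...then position with the new velocity
        trace.append(x)
    return trace


if __name__ == "__main__":
    last_second = 1000  # samples in the final 1 s at dt = 1 ms
    free = simulate(None)
    damped = simulate(PID(kp=20.0, ki=5.0, kd=20.0))
    print("peak |x| in final second, free:  ", max(abs(x) for x in free[-last_second:]))
    print("peak |x| in final second, damped:", max(abs(x) for x in damped[-last_second:]))
```

Run as a script, it prints the peak displacement in the final second for both cases, and the controlled trace has decayed far more than the free one. In this toy model the derivative term supplies most of the extra damping; with no steady disturbance present, the small integral term contributes little and is kept only to match the PID structure named above.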
